Apache Flink: History Server

One of the problems we have faced running Apache Flink is that we have a very limited window for accessing the details of failed or crashed jobs. The job details simply disappear and can no longer be retrieved, especially from the web front end. Here is what it looks like:

flink job details empty

Actually, we are not that helpless. Apache Flink does support keeping the history of completed jobs, and we can retrieve it. It is not available through the regular web front end, though, but through a separate server called the History Server, which ships with the regular Apache Flink package download.

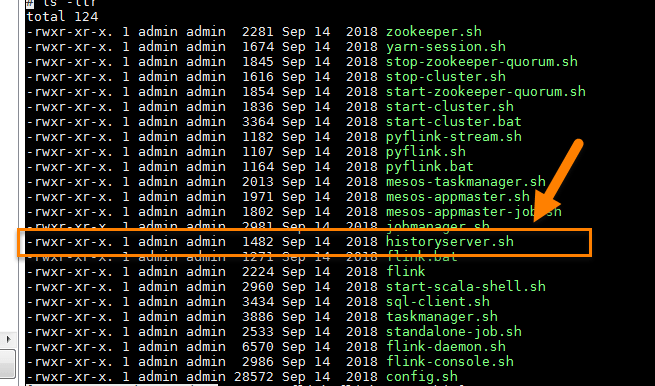

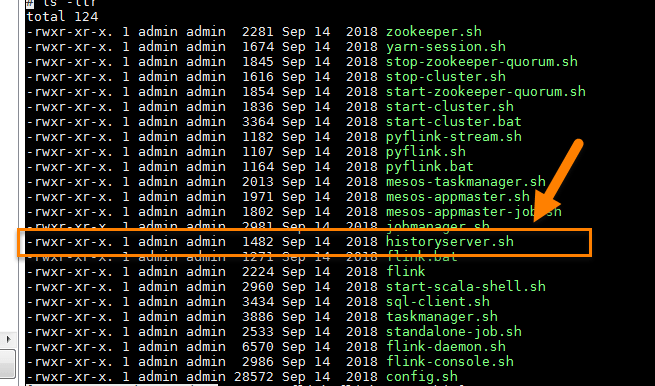

history-server-shell

How does it work?

The history server needs a way to keep track of all jobs completed by Flink, including failed ones. The JobManager helps with this: it writes the details of each completed job to the specified folder in JSON format, including vertices, metrics, watermarks and exceptions.

Flink Storage

Web front end

The history server is available as both a web front end and a RESTful service. Here is the default view for the list of completed jobs. It has just one tab with details about completed jobs. The web front end is served on the same port under the # sub-path. So if your history server is running at http://myserver:9091, then the web front end is available at http://myserver:9091/#.

HistoryServer-web

RESTful Service

The history server is also available as a RESTful service that can be accessed directly through HTTP requests. In our case, an example query is curl http://myserver:9091/jobs/overview. Various endpoints can be queried, and the results are returned in JSON format. All the endpoints are listed here: [https://ci.apache.org/projects/flink/flink-docs-stable/monitoring/historyserver.html]
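As a minimal sketch of querying the service from a shell, the helpers below build endpoint URLs against the example address used in this post. The `HS_URL` variable and the `hs_url` / `hs_get` function names are illustrative assumptions and are not part of Flink.

```shell
# Base URL of the history server; myserver:9091 is the example address from this post.
HS_URL="${HS_URL:-http://myserver:9091}"

# Build the full URL for an endpoint path (hypothetical helper).
# Tolerates a trailing slash on HS_URL and a leading slash on the path.
hs_url() {
  printf '%s/%s\n' "${HS_URL%/}" "${1#/}"
}

# Fetch an endpoint and print the JSON response (hypothetical helper).
# --fail makes curl return a non-zero exit code on HTTP errors.
hs_get() {
  curl --silent --show-error --fail "$(hs_url "$1")"
}
```

With these in place, `hs_get /jobs/overview` should print the list of completed jobs, and `hs_get "/jobs/<jobid>/exceptions"` (with a job ID taken from the overview) should print the exceptions recorded for that job.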

How to configure it?

As we saw above, the JobManager must be configured with the same location for completed-job data as the history server. In our sample configuration we used a local file system folder to keep the completed-job data, with exactly the same value for jobmanager.archive.fs.dir and historyserver.archive.fs.dir. You might want to use a distributed file system (such as HDFS) in your production environment.

The history server runs on port 8082 by default. It was conflicting with another service on my machine so I just changed the default value to 9091.
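A minimal sketch of such a configuration, written as a shell snippet that appends the relevant keys to the Flink configuration file. The `FLINK_CONF` location and the `/tmp/flink-completed-jobs` folder are assumptions made for illustration; the port 9091 is the value used in this post.

```shell
# Assumption: FLINK_CONF points at conf/flink-conf.yaml of the unpacked Flink package.
FLINK_CONF="${FLINK_CONF:-./conf/flink-conf.yaml}"
mkdir -p "$(dirname "$FLINK_CONF")"

# Hypothetical local folder for the archives. Creating it up front avoids
# relying on Flink to create it.
mkdir -p /tmp/flink-completed-jobs

# Note the explicit file:// scheme in front of the path.
ARCHIVE_DIR="file:///tmp/flink-completed-jobs"

cat >> "$FLINK_CONF" <<EOF
# JobManager writes the archives of completed jobs here
jobmanager.archive.fs.dir: $ARCHIVE_DIR
# History server reads the archives from the same location
historyserver.archive.fs.dir: $ARCHIVE_DIR
# Port of the history server web front end and REST service (default: 8082)
historyserver.web.port: 9091
EOF
```

The snippet appends rather than replaces, so if any of these keys are already present in the file it is safer to edit them by hand instead.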

Running History Server

Running it is simple. You just need to use historyserver.sh in the bin folder of the downloaded package. This is a very simple shell script that uses flink-console.sh or flink-daemon.sh depending on how you want to run the server. During development, you might want to run the server in the foreground first to check that it comes up successfully before starting it as a daemon.

To run it as a daemon, use start; otherwise, use start-foreground to start the server. You can use the stop switch to stop the server. It simply assumes that the server was started through flink-daemon.sh and passes the relevant switches to stop it.
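The three switches can be wrapped in a small helper, sketched below. The `FLINK_HOME` variable, its `/opt/flink` default and the `history_server` function name are assumptions for illustration; only historyserver.sh and its start, start-foreground and stop switches come from the Flink package.

```shell
# Assumption: FLINK_HOME is the folder where the Flink package was unpacked.
FLINK_HOME="${FLINK_HOME:-/opt/flink}"

# Hypothetical wrapper around bin/historyserver.sh that rejects unknown switches.
history_server() {
  case "$1" in
    start|start-foreground|stop)
      "$FLINK_HOME/bin/historyserver.sh" "$1"
      ;;
    *)
      echo "usage: history_server start|start-foreground|stop" >&2
      return 2
      ;;
  esac
}
```

A typical session would be `history_server start-foreground` to check that the server comes up, then Ctrl+C, followed by `history_server start` to run it as a daemon and `history_server stop` to shut it down.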

Possible Issues

While setting it up, you might run into some issues. Here are the two most common ones:

Missing Archive Directory

I forgot to set the archive directory the first time. Without this property, the history server is unable to start up and reports an error.

Missing File Scheme

The archive directory must also include the file scheme. I missed this as well and got an error.
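Both issues can be caught before starting the server. The function below is a rough pre-flight check, not something Flink provides: `check_archive_dir` is a hypothetical name, and it only looks for a single `historyserver.archive.fs.dir` entry that starts with a scheme such as `file://` or `hdfs://`.

```shell
# Hypothetical pre-flight check: verify that historyserver.archive.fs.dir
# is set in the given config file and starts with a scheme.
check_archive_dir() {
  conf_file="$1"
  # Take the last occurrence of the key, strip the "key:" prefix and trailing spaces.
  value=$(sed -n 's/^historyserver\.archive\.fs\.dir:[[:space:]]*//p' "$conf_file" \
    | tail -n 1 | sed 's/[[:space:]]*$//')
  if [ -z "$value" ]; then
    echo "historyserver.archive.fs.dir is not set in $conf_file" >&2
    return 1
  fi
  case "$value" in
    [a-zA-Z]*://*)
      echo "ok: $value"
      ;;
    *)
      echo "missing scheme in '$value' (expected e.g. file:///path or hdfs:///path)" >&2
      return 2
      ;;
  esac
}
```

Running `check_archive_dir conf/flink-conf.yaml` returns 1 when the property is missing, 2 when the value has no scheme, and prints the value otherwise.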